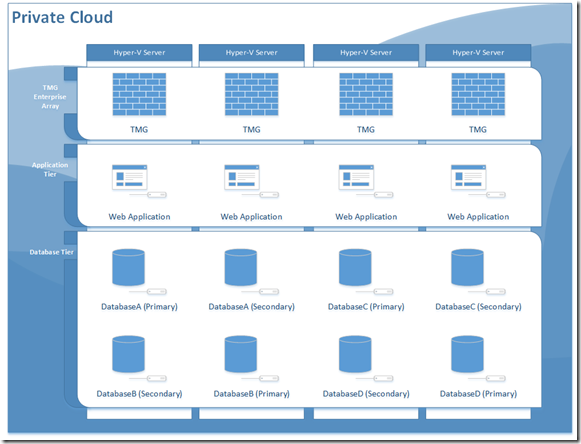

The following describes a high-availability architecture for Forefront TMG running on Hyper-V in an IaaS private cloud. It supports a web application tier and a data storage tier with Optimal Path Selection (OPS).

Scenario

In this scenario I will use four identical Microsoft Hyper-V servers. Each has an LACP LAG that trunks all VLANs to the Hyper-V host, where a virtual switch tags each VM network interface with a VLAN ID.

Construct a TMG enterprise array by placing a virtual TMG server on each Hyper-V host. Give each TMG VM at least two network interfaces (LAN and WAN), 8 GB of memory and 4 virtual processors, with the CPU reservation set to 100%. Next, configure unicast NLB on each network interface of the TMG enterprise array with bi-directional affinity. Note: real client IP addresses must be able to route to the WAN interface of the TMG enterprise array.

Construct an application tier farm by placing a virtual application server on each Hyper-V host. Set the default gateway of each application server to the primary NLB IP address on the LAN interface of the TMG enterprise array.

Construct a data tier farm by placing a virtual database server on each Hyper-V host. I recommend using database mirroring or an AlwaysOn availability architecture, in preference to clustering, to obtain application resiliency. Set the default gateway of each database server to the primary NLB IP address on the LAN interface of the TMG enterprise array.
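The layout built so far can be sketched as a small inventory check. This is only an illustration: the host names and the `10.0.10.1` address are hypothetical placeholders, not values from this deployment, and the sketch assumes that the backend VMs (application and database) are the ones that point at the LAN NLB address.

```python
from ipaddress import ip_address

HOSTS = ["hv1", "hv2", "hv3", "hv4"]   # hypothetical Hyper-V host names
LAN_VIP = ip_address("10.0.10.1")      # hypothetical primary NLB IP on the TMG LAN interface


def build_inventory(hosts, gateway):
    """One TMG, one application and one database VM per Hyper-V host."""
    vms = []
    for host in hosts:
        # The TMG VM's own upstream gateway is outside the scope of this sketch.
        vms.append({"name": f"tmg-{host}", "tier": "tmg", "host": host, "gateway": None})
        for tier in ("app", "db"):
            vms.append({"name": f"{tier}-{host}", "tier": tier, "host": host, "gateway": gateway})
    return vms


def misconfigured(vms, gateway):
    """Names of backend VMs whose default gateway is not the TMG LAN NLB address."""
    return [vm["name"] for vm in vms if vm["tier"] != "tmg" and vm["gateway"] != gateway]


inventory = build_inventory(HOSTS, LAN_VIP)
print(len(inventory))                      # 12
print(misconfigured(inventory, LAN_VIP))   # []
```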

Deploy your IaaS database solution in your data tier farm, for example by deploying multiple databases with their mirrors spread across all of your database servers.

Deploy your SaaS application solution in your application tier farm.

Behaviour

To recap, you have deployed a TMG NLB array in front of a web farm that uses a database tier backend comprised of multiple SQL servers, but the active copy of a database can exist on only one database server.

As you can see, an external request is sent to TMG, load balanced out to one of the web application servers, and then routed to a database server. By default, traffic is distributed randomly, so a single request may stay on one Hyper-V host or cross several of them.
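To put a rough number on that default behaviour, here is a back-of-the-envelope sketch. It assumes, purely for illustration, that each tier picks a host uniformly at random and independently of the others; real NLB hashing will not be perfectly uniform. The function name is made up for this example.

```python
from fractions import Fraction


def colocation_odds(hosts: int) -> dict:
    """Chance that a randomly distributed request lands on the primary database's host."""
    app_on_db_host = Fraction(1, hosts)                    # application server is on the database host
    tmg_on_db_host = Fraction(1, hosts)                    # TMG node is on the database host
    return {
        "app_and_db": app_on_db_host,
        "tmg_app_and_db": tmg_on_db_host * app_on_db_host,  # all three tiers on one host
    }


odds = colocation_odds(4)
print(odds["app_and_db"])       # 1/4
print(odds["tmg_app_and_db"])   # 1/16
```

Under these assumptions, with four hosts only one request in four keeps its application-to-database traffic off the physical network, and only one in sixteen stays on a single host end to end.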

Problem

How do you route requests with affinity in TMG to the web application server on the host where the primary database resides, so that communication between the application and the database does not cross the physical IP network but instead stays on the hypervisor network at 10 Gb/s with near-zero latency?

Solution

Configure your web application with application request routing in a workflow that instruments the first request with affinity to work out the shortest path. Then set client origin IP affinity so that the TMG enterprise array routes the client to the TMG server on the host that contains the primary database, and set web farm affinity so that additional requests over time go to the web application server that resides on the same host as the primary database.

The result is that, over time, an NLB affinity cache is built in which a client request is automatically routed along the fastest communication path through TMG, the web application server and the primary database, while reducing traffic on your physical network. This is Optimal Path Selection (OPS).
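The affinity cache idea can be modelled in a few lines. This is a conceptual sketch only, not TMG, NLB or ARR configuration: the class, the host names, the database names and the `primary_moved` helper are all hypothetical, and it assumes the workflow can look up which host currently holds a database's primary copy.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class OptimalPathSelector:
    # Hypothetical placement map: database name -> Hyper-V host holding its primary copy.
    primary_host: Dict[str, str]
    # Affinity cache: (client IP, database) -> host chosen on the first request.
    cache: Dict[Tuple[str, str], str] = field(default_factory=dict)

    def route(self, client_ip: str, database: str) -> Dict[str, str]:
        """Pin the client to the primary's host on first contact, then reuse the cached host."""
        key = (client_ip, database)
        if key not in self.cache:
            self.cache[key] = self.primary_host[database]
        host = self.cache[key]
        # Every tier is served from the same host, so traffic stays on the virtual switch.
        return {"tmg": f"tmg-{host}", "app": f"app-{host}", "db": f"db-{host}"}

    def primary_moved(self, database: str, new_host: str) -> None:
        """Assumed handling of a mirror failover: record the new host and drop stale entries."""
        self.primary_host[database] = new_host
        self.cache = {k: h for k, h in self.cache.items() if k[1] != database}


ops = OptimalPathSelector({"sales": "hv2", "hr": "hv4"})
path = ops.route("203.0.113.7", "sales")
print(path)  # {'tmg': 'tmg-hv2', 'app': 'app-hv2', 'db': 'db-hv2'}
```

If the primary moves to another host, for example after a mirror failover, the cached entries for that database would need to be rebuilt, which is what the `primary_moved` helper stands in for.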